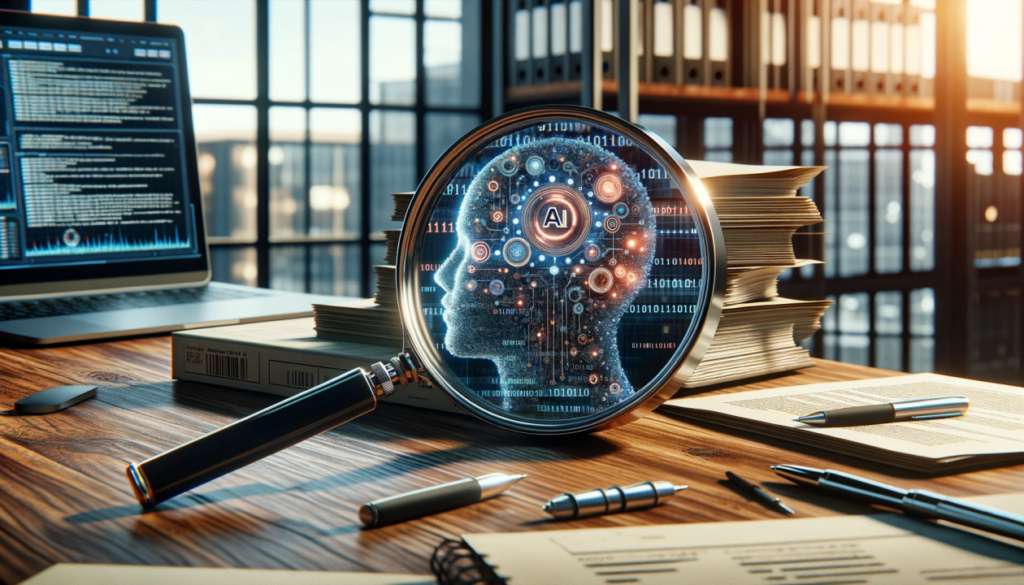

Exploring the Ethical Challenges of Generative AI

Generative AI is revolutionizing industries by creating realistic text, images, audio, and even videos. Tools like ChatGPT, DALL·E, and Stable Diffusion are reshaping how content is produced. However, this rapid advancement raises significant ethical challenges that must be addressed to ensure responsible use.

1. Misinformation and Deepfakes

One of the most pressing concerns is the potential for generative AI to spread misinformation. AI-generated text can be used to create misleading news articles, and deepfake technology can fabricate realistic images and videos. These capabilities pose a serious threat to public trust and democracy by making it difficult to distinguish real from fake content.

2. Bias and Fairness

AI models are trained on vast datasets collected from the internet, which often contain biases. As a result, generative AI can reproduce and even amplify societal prejudices, leading to biased outputs in hiring decisions, law enforcement applications, and other sensitive areas. Ensuring fairness in AI models requires continuous monitoring and bias mitigation strategies.
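One simple form of the continuous monitoring mentioned above is to compare how often a system produces a favorable outcome for each group. The sketch below computes a demographic parity gap, a common fairness metric, over a small audit log. The group names, the `audit_log` records, and both function names are hypothetical and chosen only for illustration; a real audit would need far more data and more than one metric.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of favorable outcomes for each group.

    `records` is an iterable of (group, selected) pairs, where
    `selected` is True when the outcome was favorable.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Return the difference between the highest and lowest selection rate."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log: (group, was the candidate shortlisted?)
audit_log = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = selection_rates(audit_log)
gap = demographic_parity_gap(audit_log)
print(rates, gap)
```

A gap near zero means the groups receive favorable outcomes at similar rates. A large gap does not prove unfairness on its own, but it flags where a closer review may be needed.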

3. Intellectual Property and Copyright Issues

Generative AI models are often trained on copyrighted content. This raises ethical concerns about whether AI-generated content is truly original or whether it infringes upon existing intellectual property rights. Artists, writers, and creators worry about AI replicating their work without proper credit or compensation.

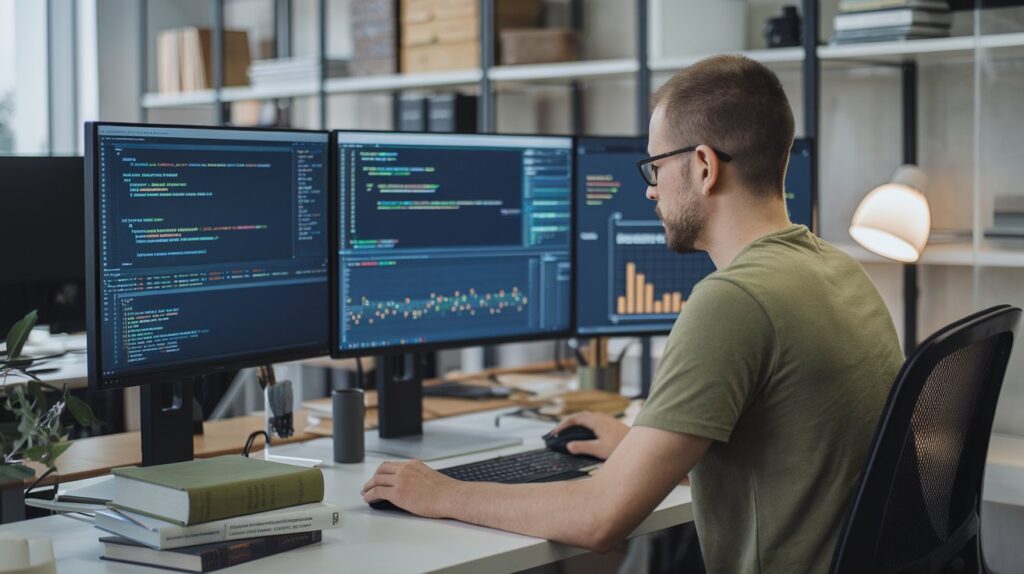

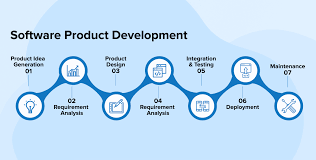

4. Job Displacement and Economic Impact

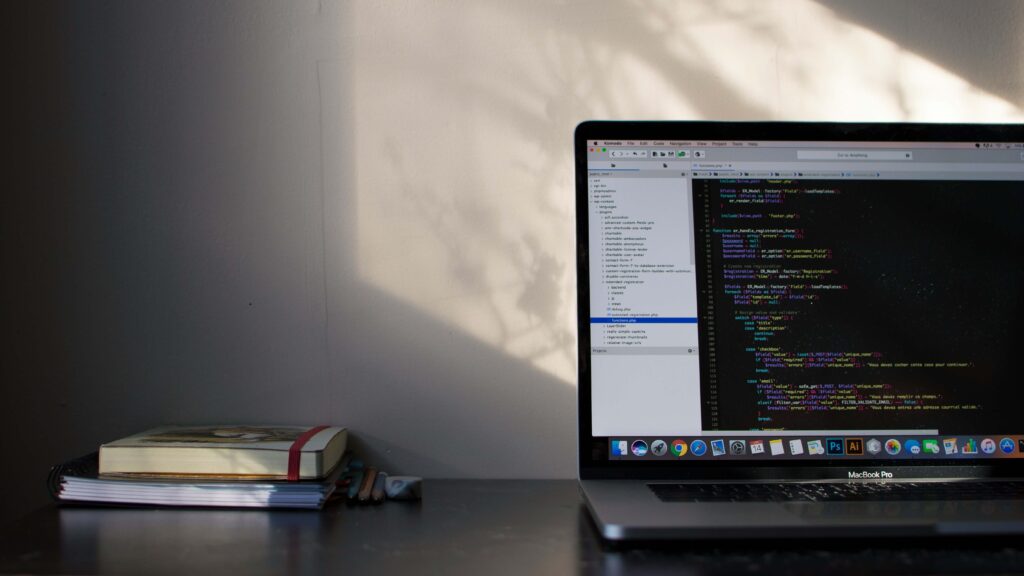

As AI becomes more capable of generating high-quality content, concerns about job displacement in creative and knowledge-based industries are growing. Writers, graphic designers, musicians, and even software developers may find their roles shifting or diminishing as businesses adopt AI-generated alternatives.

5. Privacy and Data Security

Generative AI models require vast amounts of data to function effectively. If this data includes personal information, it could lead to privacy violations. Additionally, AI-generated content could be misused to impersonate individuals, raising concerns about identity theft and fraud.

6. Autonomy and Accountability

Who is responsible for AI-generated content? If an AI system generates harmful, offensive, or illegal material, should the blame lie with the developer, the user, or the AI itself? The question of accountability remains unresolved, making it difficult to regulate AI-driven technologies effectively.

7. Environmental Impact

Training large-scale AI models requires significant computational power, leading to high energy consumption. The environmental impact of AI-driven systems is an ethical concern because that energy use contributes to carbon emissions and resource depletion.
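The link between computational power and carbon emissions can be made concrete with a back-of-the-envelope estimate: energy used is roughly the number of accelerators times their power draw times the training time, scaled by data-center overhead, and emissions are that energy times the carbon intensity of the local grid. The sketch below assumes this simplified model. The function name and every input value are hypothetical placeholders, not measurements of any real system.

```python
def training_emissions_kg(num_gpus, gpu_power_kw, hours, pue, grid_kg_co2e_per_kwh):
    """Estimate training emissions in kilograms of CO2-equivalent.

    num_gpus:              number of accelerators used (assumed constant)
    gpu_power_kw:          average power draw per accelerator, in kilowatts
    hours:                 wall-clock training time, in hours
    pue:                   power usage effectiveness, the data-center overhead factor (>= 1.0)
    grid_kg_co2e_per_kwh:  carbon intensity of the electricity supply
    """
    energy_kwh = num_gpus * gpu_power_kw * hours * pue
    return energy_kwh * grid_kg_co2e_per_kwh


# Hypothetical inputs chosen only to show the arithmetic.
estimate = training_emissions_kg(
    num_gpus=8,
    gpu_power_kw=0.5,
    hours=100,
    pue=1.0,
    grid_kg_co2e_per_kwh=0.5,
)
print(f"Estimated emissions: {estimate} kg CO2e")
```

Because the result scales linearly with each input, the same training run can have a very different footprint depending on the hardware's efficiency and the grid that powers it.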

The Role of AI Companies in India

India is emerging as a global hub for artificial intelligence development, with many companies driving innovation in this field. Some of the top AI companies in India are working on solutions that align with ethical AI development. These companies focus on various aspects of AI, including generative models, machine learning, and data security. Any artificial intelligence development company in India must prioritize responsible AI practices to mitigate the ethical challenges discussed above.

Conclusion

While generative AI offers incredible benefits, addressing its ethical challenges is crucial to ensuring responsible development and usage. Policymakers, AI developers, and users must work together to establish regulations and ethical guidelines that promote fairness, transparency, and accountability. Only by acknowledging these challenges can we fully harness the potential of generative AI while minimizing its risks.
