Bots Now Dominate the Web, and That’s a Problem

The growing dominance of bots on the web is an increasing concern for businesses, marketers, and everyday users. Bots such as search engine crawlers, chatbots, and automated content generators have clear benefits, but their unchecked proliferation creates significant challenges. This article looks at why bots now dominate the web and the problems they pose to the integrity, security, and usability of the online world.

The Rise of Bots: A Double-Edged Sword

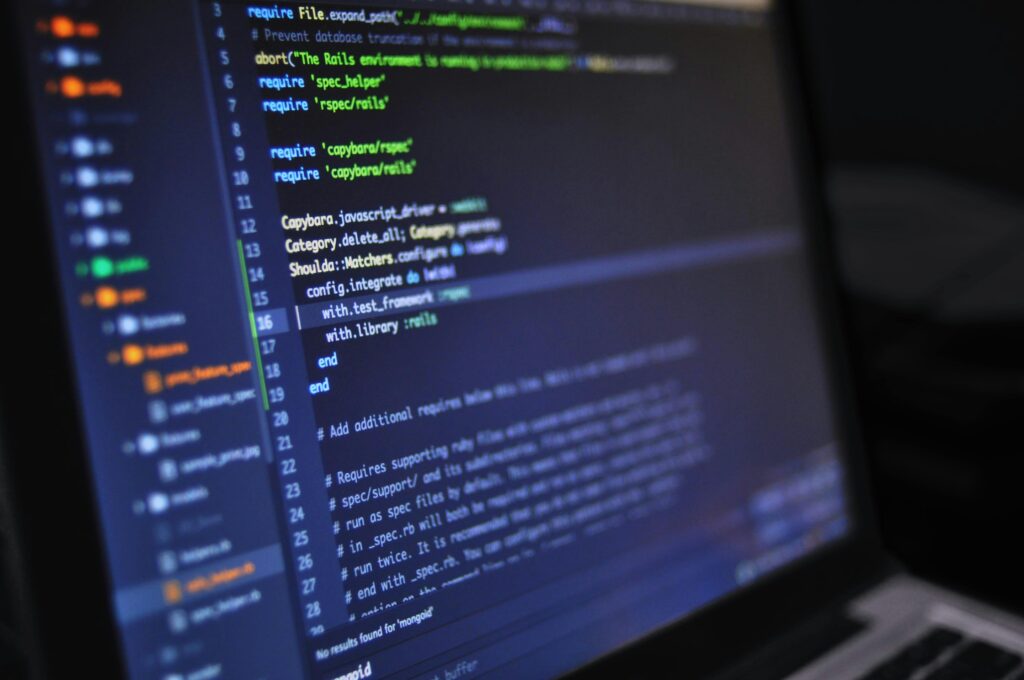

The internet has witnessed an incredible transformation over the past few years. From simple static pages to dynamic, interactive experiences, technology has revolutionized the way we interact with information online. At the heart of this transformation are bots — software applications designed to automate repetitive tasks, process large amounts of data, and perform actions on behalf of users or systems.

Bots come in various forms, each serving a distinct purpose. Some are beneficial, such as web crawlers used by search engines to index content, chatbots designed to provide customer support, and social media bots used for monitoring trends. However, the widespread use and development of malicious bots have led to a variety of negative consequences.

Malicious Bots and Their Impact on Websites

While the positive uses of bots are numerous, their malicious counterparts are causing significant issues across the web. These bots are responsible for a range of harmful activities that compromise both website performance and user experience.

1. Bot Traffic and Its Effect on Analytics

One of the major issues with bots is the disruption of web analytics. Malicious bots often flood websites with fake traffic, skewing data and making it difficult for website owners to understand their real audience. This leads to misguided decisions in marketing strategies, content development, and website design.

For instance, high bot traffic can lead to inflated bounce rates, artificial spikes in page views, and misleading conversion data. As a result, businesses may misinterpret their website’s performance and misallocate resources that could otherwise be used to improve user experience or develop targeted campaigns.
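To make the skew concrete, here is a small worked example. The session counts are invented for illustration, and it assumes every bot session views a single page and leaves, which real bots do not always do.

```python
def bounce_rate(bounced_sessions: int, total_sessions: int) -> float:
    """Share of sessions that viewed one page and left."""
    if total_sessions <= 0:
        raise ValueError("total_sessions must be positive")
    return bounced_sessions / total_sessions


# Hypothetical numbers, chosen only to illustrate the effect.
human_sessions, human_bounces = 1000, 400
bot_sessions, bot_bounces = 500, 500  # assumption: every bot session bounces

true_rate = bounce_rate(human_bounces, human_sessions)
reported_rate = bounce_rate(human_bounces + bot_bounces,
                            human_sessions + bot_sessions)

print(f"bounce rate for real visitors: {true_rate:.0%}")
print(f"bounce rate shown in analytics: {reported_rate:.0%}")
```

With these figures the analytics report shows a bounce rate 20 percentage points higher than the one real visitors actually produce, even though nothing about their behavior changed.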

2. Content Scraping and Copyright Violations

Bots are also commonly used for content scraping—a practice where automated scripts copy content from one website and republish it on another, often without the original creator’s permission. This not only infringes on intellectual property rights but also harms original content creators by reducing the uniqueness and value of their work.

Content scraping bots often steal text, images, and even code from websites, leading to the spread of duplicate content. For websites aiming to rank well on search engines, this presents a huge challenge, as search engines like Google prioritize unique and original content. The proliferation of scraped content can dilute the value of quality, well-researched, and properly crafted web pages, affecting rankings and diminishing the overall quality of the internet.
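One rough way a site owner might check whether a page elsewhere is a copy of their own is to compare overlapping word sequences ("shingles") and compute a Jaccard similarity. The sketch below is only an illustration of that idea. The function names and the three-word shingle size are assumptions, and it does not describe how any search engine detects duplicates.

```python
def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word sequences in text (case-insensitive)."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a: str, b: str) -> float:
    """Shared shingles divided by all shingles: 1.0 identical, 0.0 disjoint."""
    sa, sb = shingles(a), shingles(b)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


original = "bots copy content from one website and republish it on another"
scraped = "bots copy content from one website and republish it on another site"
unrelated = "a recipe for lemon cake with fresh berries"

print(jaccard_similarity(original, scraped))    # close to 1.0
print(jaccard_similarity(original, unrelated))  # 0.0
```

A score near 1.0 suggests a near-verbatim copy. Any threshold for flagging a page would have to be tuned by hand.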

3. Website Security Threats

Bots are often the tools attackers use to exploit website vulnerabilities. Credential stuffing, DDoS attacks, and data theft are frequently carried out by malicious bots, which can attempt to gain unauthorized access to systems, overwhelm servers with traffic, or steal sensitive user data.

For businesses that handle personal information, such as e-commerce sites, financial institutions, or healthcare providers, the risk of bot-driven security breaches is particularly concerning. These types of attacks can result in financial losses, legal penalties, and damage to brand reputation, not to mention the severe consequences for users whose data is compromised.

4. Resource Drain and Server Overload

Bots, especially those operating in large numbers, can place an overwhelming strain on website servers. This results in slower load times, degraded user experience, and potential downtime for the website. For smaller businesses that lack the infrastructure to support such traffic, the impact can be even more significant, leading to lost opportunities and frustrated customers.

Websites with excessive bot traffic may find their server resources consumed by these bots, leaving legitimate users with slower page loads or disrupted services. Additionally, the effort required to mitigate bot traffic (through CAPTCHA challenges, IP blocking, or bot detection tools) adds another layer of complexity and cost to website management.

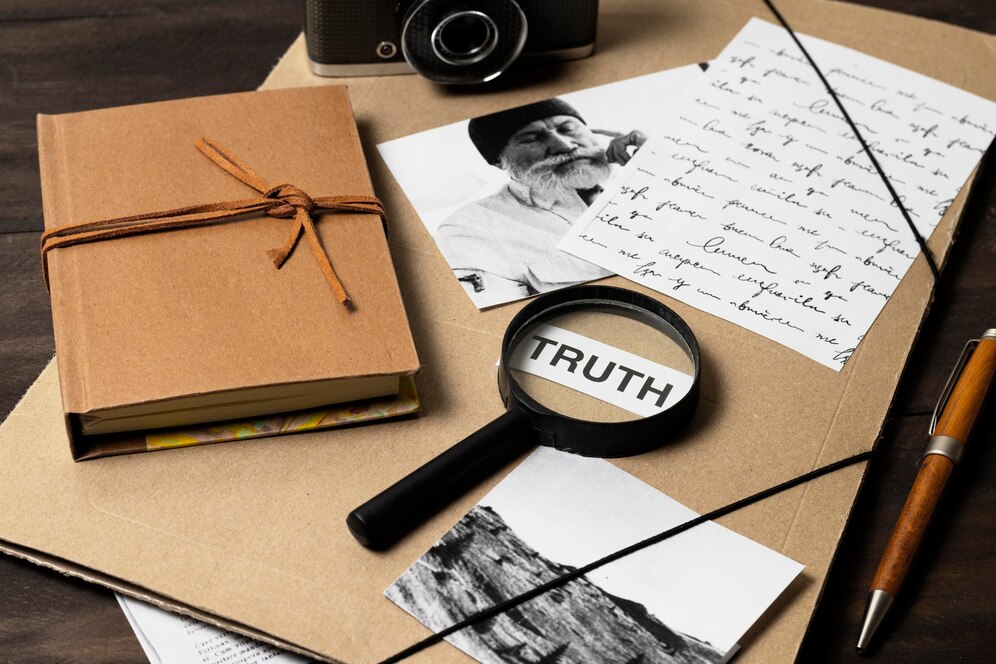

The Erosion of Authenticity in Online Interactions

One of the most concerning aspects of bot proliferation is its impact on authentic online interactions. Social media platforms, forums, and review websites have all been heavily infiltrated by bots designed to mimic human activity. These bots are often used to promote products, manipulate discussions, or spread misinformation. The result is a distorted digital environment where the line between genuine and fake interactions becomes increasingly blurred.

5. Fake Reviews and Testimonials

Bots are frequently employed to generate fake reviews and testimonials for products, services, or businesses. This creates a false perception of trustworthiness, leading consumers to make purchasing decisions based on unreliable information. The prevalence of fake reviews undermines the credibility of online platforms and erodes trust between businesses and their customers.

6. Misinformation and Manipulation

On social media platforms, bots can manipulate public opinion by amplifying specific messages or spreading misinformation. These bots can create the illusion of widespread support or opposition to particular issues, influencing political decisions, public opinion, or market trends. The rapid spread of misinformation online, especially through automated accounts, is a growing concern for both regulators and the general public.

How to Combat the Negative Effects of Bots

While bots have undoubtedly revolutionized the web in many ways, their negative consequences cannot be ignored. To mitigate these risks, businesses and website owners must implement robust strategies to safeguard their sites from malicious bot activities.

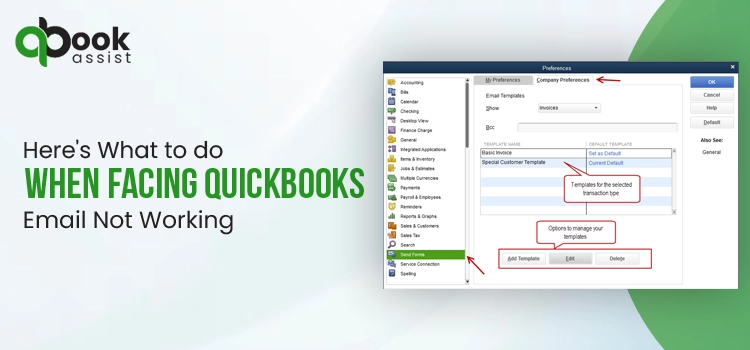

1. Implement Bot Detection and Prevention Systems

One of the most effective ways to combat bot traffic is by implementing bot detection and prevention tools. These systems analyze traffic patterns and user behavior to identify potential bots, allowing website administrators to block or limit their access. Tools like reCAPTCHA, bot protection services, and IP-based filtering are some of the most common solutions to prevent bots from overwhelming websites.
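As a minimal sketch of the IP-based limiting idea, the class below counts requests per IP address in a sliding time window and refuses requests above a threshold. The class name, the limit of 3 requests per second, and the example address are all assumptions for illustration. Production bot protection services combine many more signals than request rate.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client IP -> recent request times

    def allow(self, client_ip: str, now: float) -> bool:
        hits = self._hits[client_ip]
        # Forget requests that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: block or challenge this request
        hits.append(now)
        return True


# Hypothetical limit: 3 requests per second per IP.
limiter = SlidingWindowLimiter(max_requests=3, window_seconds=1.0)
timestamps = (0.0, 0.1, 0.2, 0.3, 1.5)
decisions = [limiter.allow("203.0.113.7", t) for t in timestamps]
print(decisions)  # [True, True, True, False, True]
```

The fourth request arrives inside the same one-second window and is refused. The fifth arrives after the earlier requests have expired and is allowed again. Passing `now` explicitly keeps the example deterministic. A real deployment would typically take it from a monotonic clock.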

2. Enhance Website Security with Multi-Layered Defense

To safeguard against malicious bots trying to exploit website vulnerabilities, it’s essential to use multi-layered security measures. Implementing TLS encryption (the successor to the now-deprecated SSL), strong firewalls, and regular vulnerability scans can help protect sensitive data and prevent unauthorized access. Additionally, using two-factor authentication (2FA) can make it more difficult for bots to access user accounts, even when they hold stolen passwords.

3. Monitor and Manage Analytics Data

Website owners must actively monitor their analytics data to identify any unusual spikes in traffic or suspicious patterns that could be the result of bot activity. Filtering tools can exclude known bot traffic so that decisions rest on data that more closely reflects genuine visitors. Regular audits and adjustments to tracking codes can also help reduce the impact of bot traffic on web analytics.
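A simple form of such filtering is to drop hits whose user-agent string identifies automation before computing any metrics. The sketch below assumes each hit is a dictionary with a `user_agent` key. The marker list is illustrative rather than exhaustive, and it only catches bots that announce themselves, so it complements rather than replaces behavioral detection.

```python
# Illustrative substrings only; real filter lists are much longer.
BOT_MARKERS = ("bot", "crawler", "spider", "headless")


def is_probable_bot(user_agent: str) -> bool:
    """Treat empty or self-identified automated user agents as bots."""
    ua = user_agent.lower()
    return not ua or any(marker in ua for marker in BOT_MARKERS)


def filter_human_hits(hits: list) -> list:
    """Keep only hits that do not look like bot traffic."""
    return [h for h in hits if not is_probable_bot(h.get("user_agent", ""))]


# Hypothetical sample of logged hits.
hits = [
    {"path": "/", "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
                                "AppleWebKit/537.36 (KHTML, like Gecko) "
                                "Chrome/120.0 Safari/537.36"},
    {"path": "/", "user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1; "
                                "+http://www.google.com/bot.html)"},
    {"path": "/pricing", "user_agent": ""},
]

human_hits = filter_human_hits(hits)
print(len(hits), "hits recorded,", len(human_hits), "kept after filtering")
```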

4. Educate Users About Online Security

To reduce the chances of users falling victim to bot-driven attacks, businesses should prioritize user education about online security. This includes teaching users how to recognize phishing attempts, avoid suspicious links, and use strong passwords. By empowering users to protect themselves, businesses can create a safer online environment for everyone.

The Future of the Web: Balancing Innovation and Security

As bots continue to evolve, it is clear that they are not going away. However, by addressing the problems they pose and taking proactive measures to protect websites and users, it is possible to maintain a balance between innovation and security. The future of the web depends on the ability to harness the power of bots for positive purposes while minimizing their negative impact on online experiences.

Ultimately, the key to success lies in the ongoing development of technologies that can detect and mitigate the risks associated with bots. By fostering a secure, transparent, and authentic digital ecosystem, businesses can continue to thrive while ensuring that users can engage with the web safely and confidently.
