Web Scraping For Big Data: Best Ways To Extract Data

Challenges While Web Scraping

Before diving into solutions, it’s crucial to understand the common challenges faced when scraping large volumes of data:

- IP Blocking – Websites monitor incoming traffic and block suspicious activity, including excessive requests from a single IP.

- CAPTCHAs – Many sites use CAPTCHAs to prevent automated access.

- Dynamic Content – Some websites load data dynamically using JavaScript, making traditional scraping difficult.

- Legal and Ethical Concerns – Certain websites have restrictions against scraping, making compliance essential.

- Rate Limiting – Websites set limits on the number of requests allowed in a specific time frame.

Best Ways To Extract Data from Bulk Websites

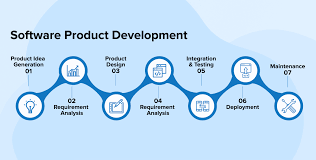

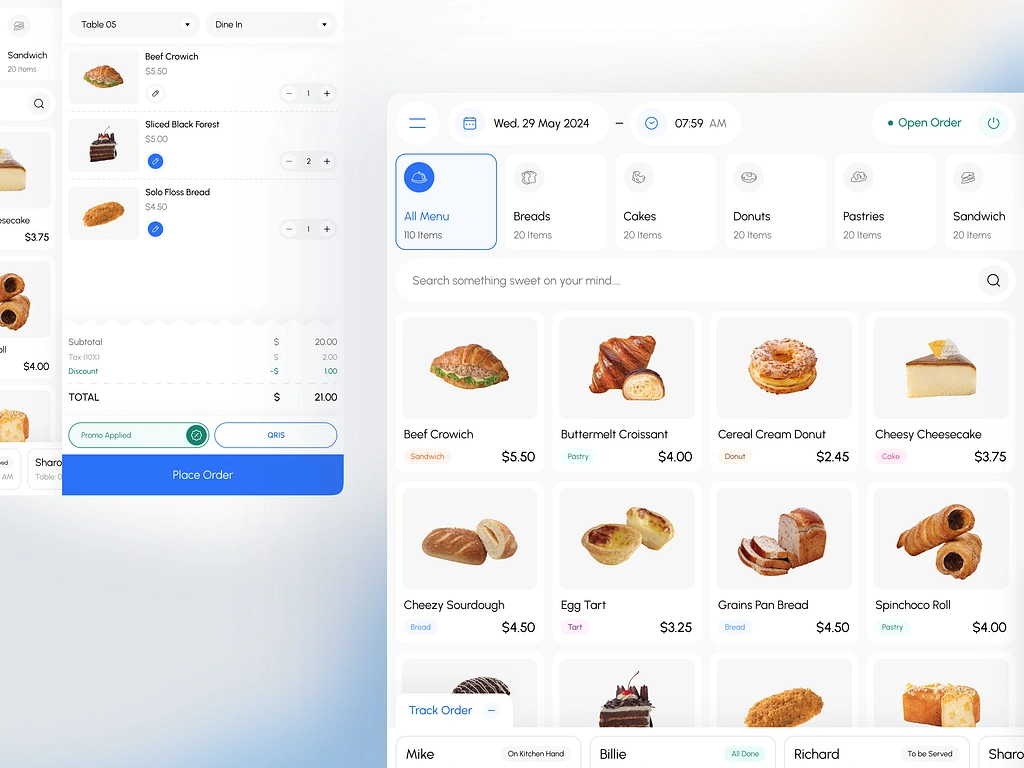

To ensure smooth and uninterrupted data extraction, follow these best practices:

1. Use a Reliable Scraping Tool: United Lead Scraper

United Lead Scraper is an advanced data extraction tool designed to handle bulk website scraping efficiently. It supports automation, proxy integration, and CAPTCHA bypassing, which suits it to large-scale scraping projects. Key features include:

- Pre-built Scraping Templates – Easily extract data from popular platforms.

- Automated IP Rotation – Prevents blocking by switching IPs periodically.

- JavaScript Rendering – Extracts data from dynamic websites.

- User-Friendly Interface – Allows both beginners and experts to scrape data effortlessly.

2. Rotate IPs Using Proxies

One of the most effective ways to prevent IP blocking is to use rotating proxies, so that requests appear to come from different users. There are three main types of proxies:

- Residential Proxies – Provide high anonymity because they use real IP addresses assigned to home users by internet service providers.

- Datacenter Proxies – Fast, but more likely to be detected.

- Rotating Proxies – Automatically change IPs to avoid detection.
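As an illustration of the rotation idea, the sketch below cycles through a small proxy pool in round-robin order using only Python's standard library. The proxy addresses, the `PROXIES` list, and the helper names are hypothetical placeholders, not part of any particular provider's setup.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; replace with addresses from your provider.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


def proxy_cycle(proxies):
    """Return an endless iterator that yields proxies in round-robin order."""
    return itertools.cycle(proxies)


def build_opener_for(proxy_url):
    """Build a urllib opener that routes HTTP and HTTPS traffic via proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url, rotation, timeout=10):
    """Fetch url through the next proxy in the rotation and return the body bytes."""
    opener = build_opener_for(next(rotation))
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Create the rotation once with `rotation = proxy_cycle(PROXIES)` and pass it to every `fetch` call, so that each request goes out through the next proxy in the pool.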

3. Mimic Human Behavior

To avoid detection, your data scraping bot should behave like a human:

- Randomize User-Agent Strings – Use different browser headers to prevent pattern detection.

- Introduce Delays Between Requests – Mimic natural browsing behavior by adding time gaps between requests.

- Use Headless Browsers – Tools like Selenium and Puppeteer can simulate real user interactions.
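The first two points can be sketched with the standard library alone. The User-Agent strings, the delay bounds, and the function names below are illustrative assumptions; tune them to the site you are working with and its stated limits.

```python
import random
import time
import urllib.request

# Illustrative User-Agent strings (assumed values); keep your own list current.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:120.0) Gecko/20100101 Firefox/120.0",
]


def build_request(url):
    """Create a request that carries a randomly chosen User-Agent header."""
    headers = {"User-Agent": random.choice(USER_AGENTS)}
    return urllib.request.Request(url, headers=headers)


def random_delay(min_seconds=1.0, max_seconds=4.0):
    """Pick a pause length, in seconds, between the two bounds."""
    return random.uniform(min_seconds, max_seconds)


def polite_fetch_all(urls, timeout=10):
    """Fetch each URL in turn, sleeping for a random interval after each one."""
    pages = []
    for url in urls:
        with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
            pages.append(resp.read())
        time.sleep(random_delay())
    return pages
```

Randomizing the pause, rather than sleeping for a fixed interval, avoids the perfectly regular request timing that is itself an easy pattern to spot.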

4. Bypass CAPTCHAs

Many websites use CAPTCHAs to block automated bots. To bypass them:

- Use AI-based CAPTCHA solvers integrated with United Lead Scraper.

- Employ third-party CAPTCHA-solving services like 2Captcha or Anti-Captcha.

- Implement session persistence to reduce CAPTCHA triggers.
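Session persistence, the last point above, usually means reusing the same cookies across requests so that the site sees one continuous visit rather than a stream of fresh visitors. A minimal sketch with the standard library follows; `make_session` is a hypothetical helper name.

```python
import http.cookiejar
import urllib.request


def make_session():
    """Return an opener that stores and resends cookies, plus its cookie jar."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar
```

Every request made through the same `opener` (for example `opener.open(url, timeout=10)`) sends back the cookies the site set earlier, and the `jar` can be inspected to see what has been stored.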

5. Scrape Dynamically Loaded Content

Some websites load content dynamically using JavaScript frameworks like React, Angular, or Vue.js. To extract such data:

- Use headless browsers like Puppeteer or Selenium to render JavaScript.

- Leverage United Lead Scraper’s built-in JavaScript rendering feature.

- Extract API endpoints used by the website and fetch data directly from them.
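For the last option, once you have found the JSON endpoint a page calls (for instance in the Network tab of the browser's developer tools), you can often request it directly and skip rendering altogether. The sketch below assumes a hypothetical endpoint that returns an object with an `items` array; the real URL and response shape depend entirely on the site.

```python
import json
import urllib.request


def parse_items(payload, key="items"):
    """Decode a JSON payload (bytes or str) and return the list stored under key."""
    data = json.loads(payload)
    return data.get(key, [])


def fetch_items(api_url, key="items", timeout=10):
    """Request a JSON endpoint directly and return the records it contains."""
    request = urllib.request.Request(api_url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_items(response.read(), key)
```

Keeping the parsing in its own function lets you check it against a saved response before sending any live requests.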

6. Follow Website Policies and Legal Guidelines

Ethical web scraping is crucial to avoid legal issues. Always:

- Check the robots.txt file to understand scraping restrictions.

- Respect rate limits to avoid overloading the website.

- Avoid scraping personal or sensitive data without permission.
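The robots.txt check can be automated with Python's built-in `urllib.robotparser` module. The sketch below parses rules from a list of lines so that it runs offline; the sample rules and the `my-scraper` agent name are made up for illustration. Against a live site you would call `set_url()` and `read()` on the parser instead.

```python
import urllib.robotparser

# Made-up rules for illustration; a real site serves these at /robots.txt.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
""".splitlines()


def load_rules(robots_lines):
    """Parse robots.txt lines into a RobotFileParser."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_lines)
    return parser


def is_allowed(parser, url, user_agent="my-scraper"):
    """Report whether the rules permit user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


def delay_for(parser, user_agent="my-scraper", default=1.0):
    """Return the site's Crawl-delay in seconds, or default if none is set."""
    delay = parser.crawl_delay(user_agent)
    return default if delay is None else delay
```

Calling `is_allowed` before each request and sleeping for `delay_for(...)` seconds between requests covers the first two points in the list; whether personal data may be collected is a legal question that no parser can answer.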
